Acquiring Object Experiences at Scale

The following Great Innovative Idea is from John Oberlin, Maria Meier, Tim Kraska, and Stefanie Tellex in the Computer Science Department at Brown University. Their paper, Acquiring Object Experiences at Scale, was one of the winners of the Blue Sky Ideas Track Competition, sponsored by the Computing Community Consortium (CCC), at the AAAI-RSS Special Workshop on the 50th Anniversary of Shakey: The Role of AI to Harmonize Robots and Humans in Rome, Italy. The half-day workshop was held on July 16th during the Robotics: Science and Systems (RSS) 2015 Conference.

The Innovative Idea

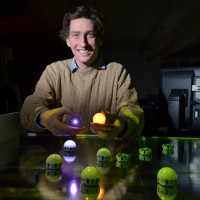

Baxter is a two-armed manipulator robot which is gaining popularity in the research and industrial communities. At the moment, there are around 300 Baxters being used for research around the world. In our paper we proposed a wide-scale deployment of our recent software Ein, which runs on Baxter, to build and share a database which describes how to recognize, localize, and manipulate everyday objects. If all 300 research Baxters ran Ein continuously for 15 days, we could scan one million objects. Robot time is valuable, and we would not ask the research community to sacrifice their daylight hours on our project, so we designed Ein to run autonomously. Running Ein, Baxter can scan a series of objects without human interaction. We envision that a participating lab could leave a pile of objects on a table next to their Baxter when they leave for the night and return the next morning to find the objects scanned and put away. With this level of automation, the human burden shifts from operating the robot to finding the objects, a substantially less tedious task. The Million Object Challenge is our effort to collaborate with the broader Baxter community in order to scan and manipulate one million objects.
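As a rough check on these figures, the stated targets imply a per-robot scan rate. The short Python sketch below works it out. The function name is hypothetical, and the resulting rate is derived from the numbers above (300 robots, 15 days, one million objects, running around the clock); it is not a measured throughput for Ein.

```python
def implied_scan_rate(total_objects, robots, days):
    """Return (objects per robot per day, minutes per object) implied by the targets.

    Assumes every robot runs continuously, 24 hours a day, for the whole period.
    """
    robot_days = robots * days
    objects_per_robot_day = total_objects / robot_days
    minutes_per_object = 24 * 60 / objects_per_robot_day
    return objects_per_robot_day, minutes_per_object


# Figures taken from the text: one million objects, 300 Baxters, 15 days.
per_day, minutes = implied_scan_rate(1_000_000, 300, 15)
print(f"{per_day:.0f} objects per robot per day, about {minutes:.1f} minutes per object")
```

Under these assumptions each Baxter would need to scan roughly 222 objects per day, or one object about every 6.5 minutes.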

Impact

Big data has a potential impact on every research community. Robots have been manipulating objects for a long time, but until recently this has required a lot of manual input from a human operator. Ein enables Baxter to collect images and 3D data about an object so that it can tell the object apart from other objects, determine its pose, and attempt to grasp it. Beyond this, Ein uses feedback from interacting with the object to adapt to difficult situations. Even if an object has surface properties which make it difficult to image, or physical properties which make initial grasp attempts unsuccessful, Baxter can practice localizing and manipulating the object over a period of time and remember the approaches which were successful. This autonomous behavior allows the collection of data on a whole new scale, opening up new possibilities for robotic research. Successful methods in machine learning (such as SVMs, CRFs, and CNNs) are powerful but require massive amounts of data. Applying these methods to their full capability is next to impossible when data must be collected laboriously by humans. By collecting data on a large scale, we can begin to tackle category-level inference in object detection, pose estimation, and grasp proposal in ways that have never been done before.

Other Research

Stefanie heads the Humans to Robots Laboratory in the Computer Science Department at Brown. The Million Object Challenge constitutes only one of three main efforts being undertaken there. The second effort is the Multimodal Social Feedback project, which was spearheaded by Miles Eldon (now graduated) and is being actively developed by Emily Wu, both advanced undergraduates at the time of their work. The Social Feedback project seeks to coordinate human and robot activities by allowing both parties to communicate with each other through speech and gesture. This allows a human to connect with Baxter using the same multimodal channels that they would use to communicate with a fellow human. Social feedback allows the robot to communicate with humans, and the results of that communication are fed to Ein, where they are used to specify interactions with objects. Every object scanned for the Million Object Challenge is another object which can be included in human-to-robot communication.

The third effort is called Burlapcraft, and is carried out by undergraduate researcher Krishna Aluru and postdoctoral researcher James MacGlashan. Krishna created a mod for the popular and versatile game Minecraft which allows James’ reinforcement learning and planning toolkit, BURLAP, to be applied within Minecraft dungeons. Burlapcraft has direct experimental applications within Minecraft, but also enables the collection of data which can be applied to other domains.

Researcher’s Background

Stefanie and John have both been fascinated with the idea of intelligent machines for their entire lives. Whereas Stefanie has sought to build a machine capable of holding a decent conversation, John has focused on the more primitive skills of sight and movement. These complementary priorities come together in their multimodal research, which treats the robot as a complete entity.

Stefanie has a strong background in Computer Science and Engineering from MIT, where she earned multiple degrees, including her doctorate. She is now an assistant professor in the Computer Science Department at Brown, where she continues her award-winning work. John has studied Mathematics and Computer Science at FSU, UC Berkeley, the University of Chicago, and Brown, where he is working on his doctorate.

Link

Stefanie’s Website: h2r.cs.brown.edu