Smartmedia: Adapting Streamed Content to Fit Location and Context

The following Great Innovative Idea is from Yaron Kanza (AT&T Labs-Research), David Gibbon (AT&T Labs-Research), Divesh Srivastava (AT&T Labs-Research), Valerie Yip (AT&T Labs-Research), and Eric Zavesky (AT&T Labs-Research). The team’s paper, Smartmedia: Locally & Contextually-Adapted Streaming Media, won first place in the Computing Community Consortium (CCC)-sponsored Blue Sky Ideas Track Competition at the 28th ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems.

The Idea

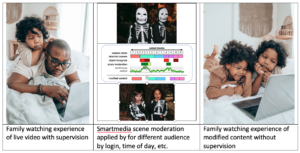

Streaming media is gaining popularity, with numerous new services for video on demand and live broadcast. It is often consumed on mobile devices, such as smartphones and tablets, in different locations and contexts. In the novel approach we present, named Smartmedia, the streamed content is adapted to the location and the context in real time, creating a new type of user experience. The following examples illustrate the potential of Smartmedia. (1) Video scenes that are unsuitable for public places could be adapted when the content is consumed in a public location, like a school or a train station. (2) Content could be personalized. For example, for a user who prefers not to watch violent content, or who is in the presence of people who would prefer not to be exposed to violence, a scene in a nature film where a lion catches a gazelle would be pixelated, skipped, or replaced by a non-violent scene. (3) The length of a video streamed to a train passenger could be adjusted to the length of the ride, e.g., by automatically changing the playback speed slightly. (4) The content could be adjusted based on emotional responses extracted from the viewer’s facial expressions, provided the viewer opts in under the relevant privacy policies. These adaptations personalize content and allow users to view it safely in a variety of situations, rather than avoiding the content altogether.
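Example (3) reduces to a small piece of arithmetic: the playback rate is the remaining content duration divided by the remaining ride time. The sketch below is only an illustration under stated assumptions, not the Smartmedia implementation. It assumes the remaining ride time is known (for instance, from a train schedule), and the ±10% bounds that keep the change "slight" are hypothetical values chosen for illustration.

```python
def playback_rate(remaining_content_s: float, remaining_ride_s: float,
                  min_rate: float = 0.9, max_rate: float = 1.1) -> float:
    """Return a playback-speed multiplier that fits the remaining content to the ride.

    A rate above 1.0 speeds playback up and a rate below 1.0 slows it down.
    The result is clamped to [min_rate, max_rate] (hypothetical bounds) so the
    change stays slight.
    """
    if remaining_ride_s <= 0:
        raise ValueError("remaining ride time must be positive")
    rate = remaining_content_s / remaining_ride_s
    return max(min_rate, min(max_rate, rate))


# A 50-minute video on a 48-minute ride plays about 4% faster.
print(playback_rate(50 * 60, 48 * 60))
```

When the clamp is active, speed changes alone cannot close the gap. In that case, other adaptations such as skipping or shortening segments would have to make up the difference.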

In Smartmedia, videos are partitioned into segments, alternative segments are created based on needs and demand, and the appropriate segments are selected according to the context. In this work we explain how geofencing and geoblocking can be combined with real-time segment selection to create a new technology: contextually adapted streaming media. Beyond content personalization, this combination can be used for (1) preventing password sharing in a non-intrusive way, (2) hyperlocal geoblocking, to facilitate copyright protection, and (3) seamlessly and safely transferring videos between nearby screens without sharing account details.
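The segment-selection idea can be sketched in a few lines. This is a minimal illustration under several assumptions and is not the authors' method. Geofences are modeled as circles with a set of restricted content tags. Segments carry descriptive tags such as "violence". For each position in the video, the selector keeps the original segment if none of its tags are avoided, otherwise falls back to the first acceptable alternative, and otherwise skips that position. All names here (`Geofence`, `Segment`, `Slot`, `select_segments`) are hypothetical.

```python
import math
from dataclasses import dataclass, field

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


@dataclass(frozen=True)
class Geofence:
    """Hypothetical circular geofence that restricts some content tags inside it."""
    name: str
    lat: float
    lon: float
    radius_m: float
    restricted_tags: frozenset


@dataclass
class Segment:
    segment_id: str
    tags: frozenset = frozenset()


@dataclass
class Slot:
    """One position in the video: the original segment plus prepared alternatives."""
    original: Segment
    alternatives: list = field(default_factory=list)


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def avoided_tags(lat: float, lon: float, fences, user_avoids) -> set:
    """Union of the user's preferences and the restrictions of every fence containing the viewer."""
    tags = set(user_avoids)
    for fence in fences:
        if haversine_m(lat, lon, fence.lat, fence.lon) <= fence.radius_m:
            tags |= fence.restricted_tags
    return tags


def select_segments(slots, lat, lon, fences, user_avoids=frozenset()) -> list:
    """Return the segment ids to stream for a viewer at (lat, lon)."""
    avoid = avoided_tags(lat, lon, fences, user_avoids)
    playlist = []
    for slot in slots:
        candidates = [slot.original, *slot.alternatives]
        # First candidate with no avoided tag; None means the slot is skipped.
        choice = next((c for c in candidates if not (c.tags & avoid)), None)
        if choice is not None:
            playlist.append(choice.segment_id)
    return playlist


slots = [
    Slot(Segment("intro")),
    Slot(Segment("lion-hunt", frozenset({"violence"})), [Segment("lion-hunt-pixelated")]),
    Slot(Segment("chase", frozenset({"violence"}))),
]
school = Geofence("school", 40.7128, -74.0060, 200.0, frozenset({"violence"}))
print(select_segments(slots, 40.7128, -74.0060, [school]))  # viewer inside the school fence
```

The same check could also drive the other uses mentioned above. For example, a geoblocking rule would restrict every tag of a licensed segment outside its permitted area. Real deployments would likely use polygonal fences and richer context signals than a single point.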

Impact

Streaming media is revolutionizing many areas by enabling impactful new services and applications, including video and music on demand, remote learning, remote conferences, streaming news, live broadcast of events, dissemination of videos on social media, and so on. In all these cases, there is great potential for adaptation capabilities that change the content and the viewing mode to suit the viewers and the context. To illustrate the novelty and potential impact, consider the huge difference between static Web pages and Web apps. Unlike a static page, an app can adapt its content to the environment and respond to interactions. Our main goal is to enable a similar revolution in streaming media.

The large effect of streaming media on the lives of many people, together with the size of the streaming-media market, makes any revolution in this area impactful. Such a revolution could influence billions of people and many organizations, including users, streaming-media services, developers of streaming-media applications, content providers, and many others.

Other Research

We conduct research in a variety of areas, including data management, data analytics, data quality, video analytics, geospatial data management, privacy protection, network planning, and more. Part of our research is industrial research, where we develop and test solutions that support the needs of AT&T. Other studies are more futuristic: we tackle visionary problems, or technological problems that might arise in the future due to the rapid evolution of data management technologies.

Yaron Kanza is a subject matter expert in databases, spatial data management, spatial applications, graph databases, and search technologies. David Gibbon is a subject matter expert in video processing, video search, machine learning, computer vision systems, and color image processing. Divesh Srivastava is a subject matter expert in data management, database systems, data integration, data quality, data privacy, data streams, and more. Eric Zavesky is a subject matter expert in passive visual event analytics, interactive video indexing and retrieval, multimedia content processing, multimodal interfaces, machine learning, biometrics, data mining, and natural language processing.

Researcher’s Background

- Yaron Kanza is a researcher at AT&T Labs-Research. He received his Ph.D. from the Hebrew University of Jerusalem and conducted a postdoctoral fellowship at the University of Toronto. Before joining AT&T he was a faculty member at the Computer Science Department of the Technion – Israel Institute of Technology, and a visiting Asst. Professor at Cornell Tech. He is an Associate Editor of the ACM Transactions on Spatial Applications and Systems.

- David Gibbon is the head of the Video and Machine Learning Research Department in AT&T’s Chief Data Office, where his focus is on creating next-generation advertising and media technologies. He received his MS in Electronic Engineering from Monmouth University and his BS in Physics from the University of Bridgeport. While at AT&T, he recently served as an Adjunct Professor at Columbia University. He serves on the editorial board of Multimedia Tools and Applications and is a senior member of the ACM and IEEE.

- Divesh Srivastava is the head of the Database Research Department in AT&T’s Chief Data Office (CDO) organization. He received his Ph.D. from the University of Wisconsin, Madison, and his B.Tech from the Indian Institute of Technology, Bombay. He is a Fellow of the ACM, the Vice President of the VLDB Endowment, and on the Board of Directors of the Computing Research Association. He has presented keynote talks at several conferences. His research interests and publications span a variety of topics in data management.

- Valerie Yip is a Computer Science student at the University of California, Berkeley. In the summer of 2020 she was an intern at AT&T Labs.

- Eric Zavesky is a Lead Inventive Scientist in the Video and Machine Learning Research Department in AT&T’s Chief Data Office. At AT&T he investigates guided interactions with content consumption, using automated content analysis and understanding, as well as situational context determination for networks, applied to video systems for entertainment and education. He received his PhD and MS in Electrical Engineering from Columbia University in New York and his BS from The University of Texas. He joined AT&T after three fantastic internships studying problems in the fields of content analysis, HCI, and XR applications.
