Student Researchers Ask How Secure We Feel About Internet Security

Originally posted by Bucknell University

BY MATT HUGHES

You’ve probably noticed how well your internet browser has gotten to know you.

Ever-advancing advertising algorithms serving up targeted ads, not to mention data breach after data breach, have made many internet users more attuned than ever to how little privacy we often have on the internet. But when it comes to information that we really want to protect, how many of us can say that we understand the steps that companies we interact with online are taking to safeguard our privacy?

That question is at the heart of a new study published by two Bucknell University undergraduates and two computer science professors that blends computing with psychology to examine how internet users feel about privacy-protecting tools.

“A big issue with data privacy is that nobody really understands or has a very clear grasp of what a private context online really means,” said Stephanie Garboski ’18, one of the student researchers. “Our research is about trying to make people comfortable with the protocols involved.”

In May, Garboski and co-researcher Brooke Bullek ’18 presented and published their study at the most prestigious international conference in the field of human-computer interaction, ACM-CHI, where they were among only a handful of undergraduates presenting alongside university faculty and researchers for companies like Microsoft and IBM. Bullek was also awarded an $8,000 Scholarship for Women Studying Information Security from the Computing Research Association due in large part to the project.

Another Bucknell student, Jordan Sechler ’19, presented a poster at the same conference in Denver.

The study Bullek and Garboski undertook with professors Evan Peck and Darakhshan Mir, computer science, was an experiment to see how comfortable users were sharing their data using one of the better tools out there for ensuring data privacy. Called differential privacy, it involves adding inaccurate information, or “mathematical noise,” to data collected from users. That noise can be accounted for with statistical analysis to reveal accurate trends in aggregate data, but adding it means that information can’t be traced back to a user with certainty.

Peck likens the tool to asking a classroom of students to answer the question “Did you cheat on your exam?” by flipping a coin. The students who flip heads must answer yes, regardless of the truth, while those who flip tails must answer truthfully.

“The instructor can figure out what percentage of people in the classroom probably cheated by using probability, but it protects people individually,” Peck said. “It lets you understand statistics not on a per-person basis but on a population level. That’s why big companies like Apple are really interested in this, because they’re trying to get population-level statistics without compromising individual privacy.”
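Peck's classroom example can be sketched in a few lines of Python. This is only an illustration, not code from the study: it assumes a fair coin, and the function names and the 20 percent cheating rate are hypothetical. Because every heads produces a forced "yes," the expected share of "yes" answers is 0.5 + 0.5 × (true rate), and the instructor can invert that relationship to estimate the true rate without knowing any one student's real answer.

```python
import random


def coin_flip_response(truly_cheated: bool, rng: random.Random) -> bool:
    """Heads: answer yes regardless of the truth. Tails: answer truthfully."""
    heads = rng.random() < 0.5  # assumption: a fair coin
    return True if heads else truly_cheated


def estimate_true_rate(responses: list[bool]) -> float:
    """Invert P(yes) = 0.5 + 0.5 * true_rate to estimate the population rate."""
    observed_yes = sum(responses) / len(responses)
    estimate = 2 * observed_yes - 1
    # Sampling noise can push the raw estimate slightly outside [0, 1].
    return min(1.0, max(0.0, estimate))


def simulate(true_rate: float = 0.2, n: int = 100_000, seed: int = 0) -> float:
    """Simulate n students (hypothetical true_rate) and return the estimate."""
    rng = random.Random(seed)
    truths = [rng.random() < true_rate for _ in range(n)]
    responses = [coin_flip_response(t, rng) for t in truths]
    return estimate_true_rate(responses)


if __name__ == "__main__":
    print(f"Estimated cheating rate: {simulate():.3f}")
```

With a large class the estimate lands close to the true rate, yet any single "yes" remains deniable, since half of all students were told to say yes.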

In their own study, Bullek and Garboski asked more than 200 respondents recruited online to answer a dozen highly personal and potentially embarrassing questions, including “Have you ever cheated on a college exam?” and “Have you used recreational drugs in the last six months?” Respondents were told they were testing an experimental Facebook feature that would post their answers to their Facebook wall in order to “invite them to think about more open, vulnerable contexts,” Bullek explained.

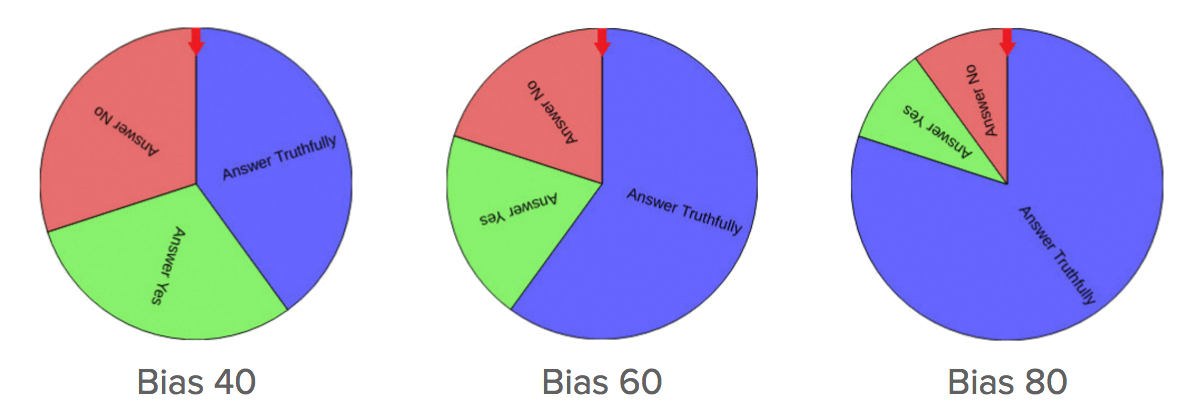

The three spinners used in the experiment.

Before answering each question, participants were made to spin a wheel that instructed them to either answer the question truthfully or to answer with either yes or no, regardless of the truth. Three different spinners were used, one compelling participants to answer a question truthfully only 40 percent of the time, another 60 percent of the time, and the third 80 percent of the time.
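To see why the 40-percent spinner offers the most privacy, the three spinners can be compared with a rough Python sketch. It rests on an assumption the article does not state: that the forced portion of each wheel is split evenly between "yes" and "no." The function names are hypothetical. Under that assumption, the privacy loss (the quantity usually written as epsilon in differential privacy) is the log of the ratio between the chance of reporting "yes" when the truth is yes and the chance of reporting "yes" when the truth is no. Smaller values mean a reported answer reveals less.

```python
import math


def report_yes_probability(true_answer: bool, p_truth: float) -> float:
    """Chance a participant reports yes, given the spinner's truth share."""
    # Assumption: forced answers are split evenly between yes and no.
    forced_yes = (1 - p_truth) / 2
    return p_truth * (1.0 if true_answer else 0.0) + forced_yes


def privacy_loss(p_truth: float) -> float:
    """Epsilon: log-ratio of reporting yes when the truth is yes vs. no."""
    return math.log(
        report_yes_probability(True, p_truth)
        / report_yes_probability(False, p_truth)
    )


def spinner_estimate(observed_yes_rate: float, p_truth: float) -> float:
    """Remove the forced answers to estimate the true share of yes answers."""
    return (observed_yes_rate - (1 - p_truth) / 2) / p_truth


if __name__ == "__main__":
    for p in (0.4, 0.6, 0.8):
        print(f"truthful {p:.0%} of the time -> epsilon = {privacy_loss(p):.2f}")
```

Under the even-split assumption, epsilon works out to ln((1 + p) / (1 - p)), so it grows as the spinner demands the truth more often. The 80-percent spinner gives researchers a less noisy signal but leaves each participant with less cover.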

Afterwards, Bullek and Garboski asked the participants which spinner they felt most comfortable using. While the results confirmed what the researchers predicted, that most participants would choose the spinner requiring them to tell the truth 40 percent of the time because it offered the most privacy, they also found that a significant subset of respondents chose the 80-percent spinner, which required them to tell the truth most often. The reason: they didn’t feel comfortable lying.

“The word lying or lie came up a lot,” Bullek said. “People were equating this mathematical noise that they’re adding, which is supposed to protect them, with being deceptive. It revealed that some people may have a fundamental misunderstanding of how differential privacy and its variants work.

“That’s why we became interested in this whole investigation,” she continued. “We thought that people might not understand how adding mathematical noise isn’t a bad thing — that it’s actually a good way to mitigate risk while still providing beneficial information to the researchers and companies collecting information.”

Understanding which tools companies use to protect user privacy is important because not all privacy tools are created equal. For some companies, anonymizing data involves simply removing the names of users from the data they collect, but that approach doesn’t provide adequate privacy, the researchers noted. Bullek pointed to a study by data researcher Latanya Sweeney, which was able to identify individuals with around 90 percent certainty using three pieces of information: gender, date of birth and ZIP code.

“They’re the kind of things that you wouldn’t think twice about giving up while online shopping,” Bullek said. “But they’re very unique when you put them together.”

The Bucknell researchers hope their study is a first step toward encouraging companies to be more forthcoming in explaining the steps they take to protect user data, rather than burying that explanation in submenus and user-service agreements. If they do, the researchers believe many internet users might feel less anxious about sharing information when they know the right tools are being used for their protection.

“Some companies do try to help you protect your privacy, but it happens through a black box, using these kind of magic words that no one understands,” Peck said. “As users, we’re forced to make a decision about whether to use these privacy tools or not, and we have no idea what it means. We’re trying to give people an example of what’s going on and get a sense of how they feel about it.”

These students participated in the Collaborative Research Experience for Undergraduates (CREU) CRA-W program from 2016-17.