Whistling Past the Graveyard: What the End of Moore’s Law Means to All of Computing

Is “Moore’s Law” ending? If so, what does this mean for all of us in the field of computing? These questions were discussed at a July 2016 panel at the Computing Research Association’s Conference at Snowbird, organized by Tom Conte of Georgia Tech and Margaret Martonosi of Princeton. The panel included a technologist (Paolo Gargini, Intel Fellow Emeritus), three computer architects (David Brooks of Harvard, Mark D. Hill of Wisconsin-Madison, and Conte), and a quantum computer scientist (Krysta Svore of Microsoft Research).

Is “Moore’s Law” ending? The answer depends on what you think Moore’s Law means. First, if it means, as the popular press suggests, that “computer performance doubles every 18 months,” then yes, it has ended. Second, if it means that “the economics are such that the number of transistors on an integrated circuit for a given cost can double about every two years,” then Moore’s Law is alive, but power constraints make it difficult to use all of those transistors. Third, if Moore’s Law can continue through vertical (3D) scaling, as is already done in flash memory, then there is reason for optimism, and Gargini in particular is optimistic.
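To see how far apart the first two readings drift, it helps to compound them. The short Python sketch below compares ten years of doubling every 18 months with ten years of doubling every two years. It is plain arithmetic, not a model of any real chip, and the function name `growth_factor` is ours, chosen purely for illustration.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Multiplicative growth after `years` if a quantity doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


# Popular-press reading: performance doubles every 18 months (1.5 years).
press = growth_factor(10, 1.5)

# Economic reading: transistors per unit cost double about every two years.
economic = growth_factor(10, 2.0)

print(f"18-month doubling over 10 years: {press:.1f}x")
print(f"24-month doubling over 10 years: {economic:.1f}x")
```

Over a decade the 18-month reading compounds to roughly 100x, while the two-year reading gives 32x, so the two definitions differ by a factor of about three after just ten years.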

If so, what does this mean for all of us in the field of computing? Surprisingly, given so many experts from disparate fields, there was a consensus: the way we compute must change fundamentally if computing performance is to continue its historic exponential growth. Moreover, unlike in the past, when architects could hide such changes behind the scenes, the coming changes will ripple throughout all of computing: from algorithms, to systems, to programming languages, on down through architecture to electron device selection.

What might this “brave new world” look like? Krysta Svore described how quantum computing is becoming practical. She estimated that within the decade, quantum devices will have enough qubits to solve important problems that we cannot solve today. Meanwhile, Microsoft Research has created tools that we can use today to program these systems of tomorrow.

David Brooks noted that in today’s smartphones we use fixed-function units to overcome the power limitations of the underlying technology. For example, we have specialized, non-programmable hardware units that perform one task—codecs, modems, etc.—more efficiently than any programmable solution could. When you cut out programmability, you remove the wasted power needed to fetch and decode a program’s instructions.

Mark D. Hill reminded the audience of the 1000-fold gains in (cost-)performance from 1980 to 2000, which were achieved in a manner hidden from software. He argued that another 1000-fold gain is under way for 2000 to 2020, but that this one is software-visible, coming via multi-core, heterogeneity, and (data center) clusters. These gains mattered not because they made word processing faster, but because they enabled new techniques like deep learning. To the extent that future applications, as yet unknown, require more (cost-)performance, delivering it will require more cooperation up and down the software-hardware stack to enable greater efficiency, at least until radically new technology becomes available.
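Hill’s figures can be translated into a doubling time. The sketch below assumes a clean 1000x gain over a 20-year span (a round number, not a measured one) and computes the implied doubling period and compound annual growth rate; `doubling_time` and `annual_rate` are illustrative names, not from any library.

```python
import math


def doubling_time(total_gain: float, years: float) -> float:
    """Years per doubling implied by a total multiplicative gain over `years`."""
    return years * math.log(2) / math.log(total_gain)


def annual_rate(total_gain: float, years: float) -> float:
    """Compound annual growth factor implied by the same total gain."""
    return total_gain ** (1 / years)


# Assumed round figures: roughly 1000x (cost-)performance per 20-year span.
gain, years = 1000, 20
print(f"Doubling every {doubling_time(gain, years):.2f} years")
print(f"About {100 * (annual_rate(gain, years) - 1):.0f}% growth per year")
```

A 1000-fold gain over 20 years works out to a doubling roughly every two years (since 2^10 = 1024), or about 41% per year, which lines up with the two-year doubling in the economic reading of Moore’s Law.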

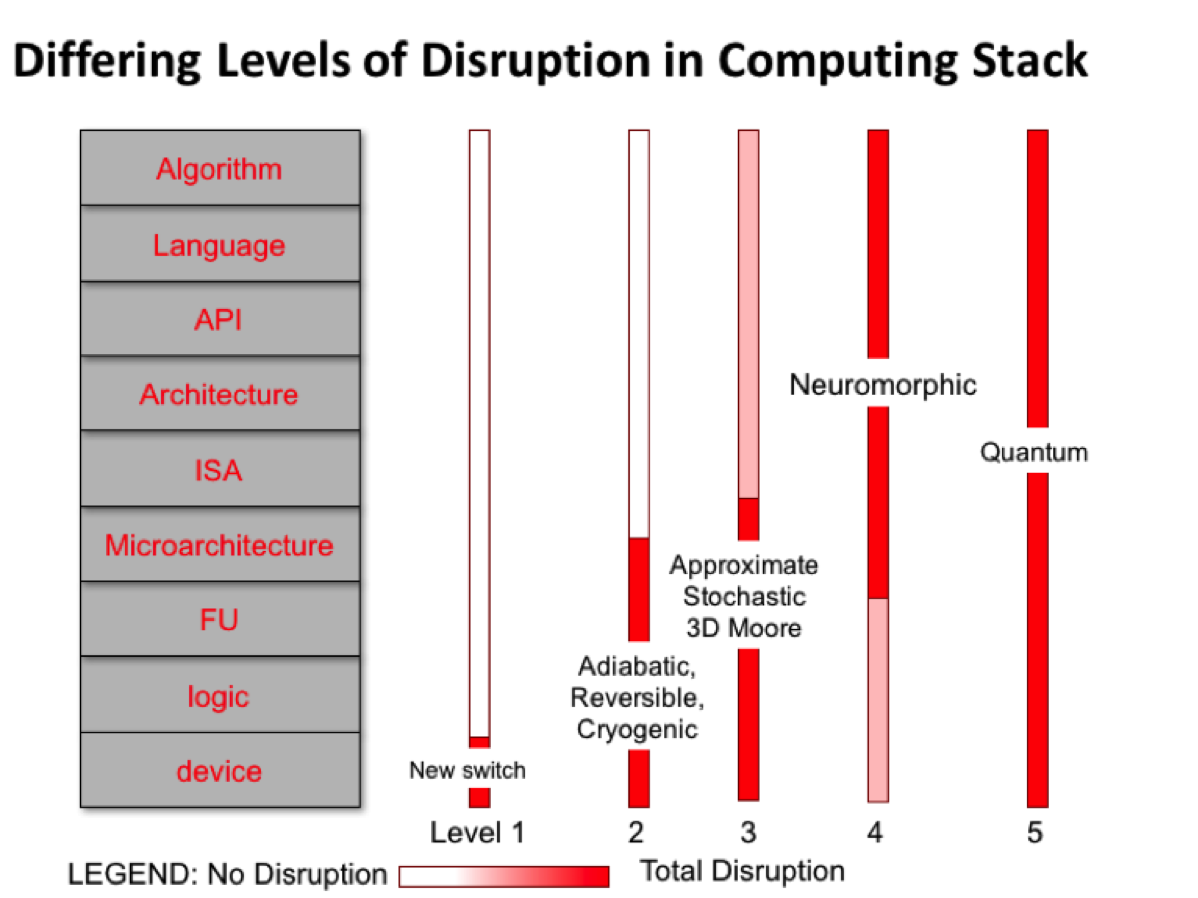

Tom Conte made the point that some solutions on the horizon will cause massive disruption in the layers of abstraction of the computing stack. Others, however, will not. He presented the following to illustrate his point:

Click to enlarge and see full presentation.

Level 1, a new switch, does not appear to be possible because of the limitations Gargini described. Levels 2-5 require disruptions to varying degrees. Quantum computing, for example, disrupts everything from algorithms on down. Neuromorphic computing currently runs on CPUs and GPUs, but it does so very inefficiently. In Google’s AlphaGo, a CPU/GPU cluster beat a human Go master in 4 out of 5 games, but the cluster consumed 1.2 kilowatts, compared to the tens of watts consumed by its opponent’s brain. True neuromorphic computing needs to run on “native hardware,” which will likely be built from analog rather than digital circuitry.

What is the future of computing? It is going to be significantly different from today. All of computer science and engineering needs to respond to these changes now. There is much research to do, of course. But more pressing is this: how do we educate students in computing today, when the fundamentals of the field are changing so rapidly?